Human Machine Interaction

Humans interact with computers in many ways, and the interface between them is crucial to facilitating this interaction. Desktop applications, internet browsers, handheld computers, and computer kiosks make use of today's prevalent graphical user interfaces (GUIs). Voice user interfaces (VUIs) are used for speech recognition and speech synthesis systems, and the emerging multimodal and gestalt user interfaces allow humans to engage with embodied character agents in a way that cannot be achieved with other interface paradigms. The field of human-computer interaction (HCI) has grown not only in the quality of interaction; it has also branched in several directions over its history. Instead of designing regular interfaces, the different research branches have focused on multimodality rather than unimodality, intelligent adaptive interfaces rather than command/action-based ones, and active rather than passive interfaces.

The Association for Computing Machinery (ACM) defines human-computer interaction as “a discipline concerned with the design, evaluation and implementation of interactive computing systems for human use and with the study of major phenomena surrounding them”. An important facet of HCI is securing user satisfaction (also called End User Computing Satisfaction). Because human–computer interaction studies a human and a machine in communication, it draws on supporting knowledge from both the machine and the human side. On the machine side, techniques in computer graphics, operating systems, programming languages, and development environments are relevant. On the human side, communication theory, graphic and industrial design disciplines, linguistics, social science, cognitive psychology, social psychology, and human factors such as computer user satisfaction are relevant. Engineering and design methods are relevant as well. Because HCI is multidisciplinary, people with different backgrounds contribute to its success. HCI is also sometimes referred to as human–machine interaction (HMI), man–machine interaction (MMI) or computer–human interaction (CHI).

After decades of research in HMI, the future is arriving sooner than expected. The following are some of the latest developments in the field that are redefining the meaning of sensors and input devices.

Wearable Gesture Sensor

Figures: muscle signals; a man using a Myo armband to control a quadcopter

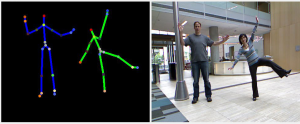

3D Depth Sensor and Posture Recognition

Recent developments in computational photography and computer vision have made it possible for a camera to capture the 3D depth of a scene while tracking posture through skeletal mapping. This capability can be used to design gesture-controlled applications and robotics.

Figure: skeletal map of the posture
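To make the idea of gesture control from a skeletal map concrete, here is a minimal Python sketch. It assumes the depth sensor delivers each tracked joint as an (x, y, z) position in metres; the joint names, the sample frame, and the 160-degree threshold are hypothetical choices for illustration and do not come from any particular sensor SDK.

```python
import math

def joint_angle(a, b, c):
    """Return the angle in degrees at joint b formed by the 3D points a-b-c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0:
        raise ValueError("joints coincide; angle is undefined")
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

def is_arm_extended(skeleton, threshold_deg=160.0):
    """Treat the arm as extended when the elbow is almost straight.

    The threshold is an assumed value, not a calibrated one.
    """
    angle = joint_angle(skeleton["shoulder"], skeleton["elbow"], skeleton["wrist"])
    return angle >= threshold_deg

# Hypothetical frame from a depth sensor: joint name -> (x, y, z) in metres.
frame = {
    "shoulder": (0.0, 1.4, 2.0),
    "elbow": (0.3, 1.4, 2.0),
    "wrist": (0.6, 1.4, 2.0),
}
print(is_arm_extended(frame))
```

A gesture-controlled application could evaluate a check like this on every frame and trigger a command, for example moving a robot, when the pose holds for several consecutive frames.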

Robot Mask

Courtesy AAI Labs Tsukuba University, Japan

We have been developing the “robot mask” to support rehabilitation for hemi-facial paralysis. Facial expressions are produced by the actions of the facial muscles, which are controlled by electrical signals sent through the facial nerve, the seventh (VII) of the 12 paired cranial nerves. However, due to various medical conditions, these voluntary muscle activities can sometimes degrade or even disappear, resulting in facial paralysis. Although the available medical support can speed up the healing process, temporary paralysis can sometimes last up to three to four years, so other means of providing immediate relief are necessary. Despite advances in biomedical engineering, little effort has been made to address facial paralysis through robotics.

The main goal of bioelectrical signal-based control is to obtain seamless control of artificially generated facial expressions and to support the timing of the facial message signs needed for interpersonal communication. By acquiring bioelectrical signals from the contralateral (healthy) side of a person with hemifacial paralysis and using them to actuate the artificial muscles on the ipsilateral (paralysed) side, we aim to artificially correct the baseline facial muscle tone and to minimise the drooping of the ipsilateral face.
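The control idea described above can be sketched in a few lines of Python. This is only an illustration, not the lab's actual method: it assumes the signal from the healthy side arrives as a list of raw samples, and it uses a common envelope-following approach (rectify, smooth, normalise). The function name, the `rest_level` and `max_level` calibration values, and the smoothing factor `alpha` are all hypothetical.

```python
def emg_to_actuation(samples, rest_level, max_level, alpha=0.1):
    """Map raw bioelectrical samples from the healthy side to commands in [0, 1].

    rest_level and max_level are assumed per-user calibration values for the
    smoothed signal at rest and at full voluntary contraction.
    """
    if max_level <= rest_level:
        raise ValueError("max_level must be greater than rest_level")
    envelope = 0.0
    commands = []
    for sample in samples:
        rectified = abs(sample)
        # Exponential moving average smooths the rectified signal.
        envelope += alpha * (rectified - envelope)
        level = (envelope - rest_level) / (max_level - rest_level)
        # Clamp so the artificial muscle never receives an out-of-range command.
        commands.append(min(1.0, max(0.0, level)))
    return commands

# Hypothetical usage: a short burst of activity followed by rest.
burst = [0.8, -0.9, 0.7, -0.8] * 10 + [0.0] * 40
commands = emg_to_actuation(burst, rest_level=0.05, max_level=1.0)
print(round(max(commands), 2), round(commands[-1], 2))
```

The smoothing trades responsiveness for stability: a larger `alpha` follows the healthy side more quickly, which matters for the timing of expressions, while a smaller value suppresses jitter in the actuated side.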

Emotion Mask

Courtesy AAI Labs Tsukuba University, Japan

Facial expressions play a significant role in interpersonal information exchange by providing additional information about the emotional state or intention of the person displaying them. The traditional approach to recognizing emotional facial expressions uses video or photographic cameras, followed by computer vision algorithms that identify the expressions. These methods, however, require a camera directed at the person’s face and have little tolerance for occlusion or for changes in lighting conditions or camera angle.

The goal of this research is to develop an emotional communication aid to improve human-human communication. The Emotion Reader has applications in several areas, especially in therapy and assistive technology. It can be used to provide biofeedback to patients during rehabilitation and a quantitative smile evaluation during smile training. Additionally, it can aid the visually impaired: the listener is able to perceive the speaker’s facial expressions through alternative forms of communication such as audio or vibro-tactile stimulation. It is also a tool for e-learning, distance communication and computer games, because it can transmit facial expressions automatically without requiring high bandwidth. Another application lies in improving the quality of life of patients suffering from facial paralysis, where the signals obtained from the healthy side of the face can be used to control a robot mask that produces an artificial smile on the paralyzed side.

By: Anant Kumar Shukla

Email: anantshukla42@gmail.com